TL;DR Summary of The Rise of Context Engineering in AI Models

Optimixed’s Overview: How Context Engineering is Transforming AI Accuracy and Usability

Understanding Context Engineering in Modern AI

Context engineering is the practice of supplying an AI model with relevant background information and external data sources so that its responses are more precise and relevant. Where prompt engineering focuses on crafting precise instructions, context engineering also draws in context from tools, memory, and up-to-date data, and for complex tasks it typically outperforms prompt tweaks alone.
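To make the idea concrete, here is a minimal Python sketch, assuming the context sources are already available as plain strings. The `ContextSources` container and `build_context` function are hypothetical names for illustration, not part of any tool's API. They show how standing instructions, memory, tool results, and retrieved documents can be assembled into labeled sections ahead of the user's question.

```python
from dataclasses import dataclass, field


@dataclass
class ContextSources:
    """Hypothetical bundle of the context sources an app might gather."""
    instructions: str  # standing, system-level guidance
    memories: list[str] = field(default_factory=list)  # facts remembered from earlier sessions
    tool_outputs: dict[str, str] = field(default_factory=dict)  # tool name -> latest result
    documents: list[str] = field(default_factory=list)  # freshly retrieved external data


def build_context(sources: ContextSources, question: str) -> str:
    """Assemble labeled context sections, skipping empty ones, ahead of the question."""
    sections = [f"## Instructions\n{sources.instructions}"]
    if sources.memories:
        sections.append("## Memory\n" + "\n".join(f"- {m}" for m in sources.memories))
    if sources.tool_outputs:
        results = (f"[{name}] {out}" for name, out in sources.tool_outputs.items())
        sections.append("## Tool results\n" + "\n".join(results))
    if sources.documents:
        sections.append("## Reference documents\n" + "\n\n".join(sources.documents))
    sections.append(f"## Question\n{question}")
    return "\n\n".join(sections)


# Example: no tool results this time, so that section is omitted.
prompt = build_context(
    ContextSources(
        instructions="Answer concisely and cite the reference documents.",
        memories=["The user works in B2B marketing."],
        documents=["Example note: the RAG market reached $1.2 billion in 2024."],
    ),
    "How big is the RAG market?",
)
print(prompt)
```

Keeping each source in its own labeled section makes it easy to see, and later trim, what the model was actually given.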

The Role of Retrieval Augmented Generation (RAG)

- RAG Explained: Before answering, the model retrieves relevant passages from external data sources, which can include information newer than its training data, and uses them to generate up-to-date, accurate answers.

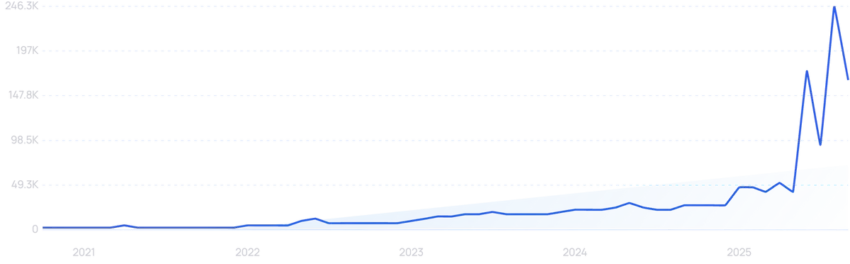

- Market Impact: The RAG market has grown rapidly, reaching $1.2 billion in 2024, with projections of $11.2 billion by 2030.

- Adoption: Over half of enterprise AI implementations use RAG. OpenAI's GPT-5 exemplifies the shift, prioritizing retrieval over relying solely on knowledge from its training.
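The retrieve-then-generate loop behind RAG can be sketched in a few lines of Python. This is an illustrative sketch, not a production design: it swaps in simple word-count cosine similarity where a real system would typically use an embedding model and a vector index, and the helper names (`retrieve`, `augment`) are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a stand-in for a real embedding model's input."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Return the k corpus passages most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(corpus, key=lambda doc: cosine(q, Counter(tokenize(doc))), reverse=True)
    return ranked[:k]


def augment(query: str, passages: list[str]) -> str:
    """Prepend retrieved passages so the model answers from external data."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return f"Answer using only the sources below.\n{context}\n\nQuestion: {query}"


corpus = [
    "Gemini 1.5 Pro supports a context window of up to 2 million tokens.",
    "RAG lets a model pull in external data that postdates its training.",
    "GPT-3.5 launched with a context window of roughly 4,000 tokens.",
]
question = "How large is the Gemini 1.5 Pro context window?"
top = retrieve(question, corpus, k=1)
print(augment(question, top))
```

Only the single best-matching passage reaches the prompt, which is the core of what RAG adds on top of the model's built-in knowledge.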

Long-Context LLMs: Benefits and Limitations

AI models have dramatically expanded their context windows, from GPT-3.5's roughly 4,000 tokens to Gemini 1.5 Pro's 2 million, but bigger isn't always better. Performance can decline sharply past certain context lengths because irrelevant material distracts the model, raises the risk of hallucinations, or introduces conflicting information. Processing very long contexts also adds latency and cost, so RAG's focused retrieval of only the relevant snippets is often the more efficient route to accurate, fast answers.
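The trade-off between stuffing everything into a long context and retrieving only what matters can be framed as a token-budget problem. In this hypothetical Python sketch, `pack_context` keeps the highest-scoring chunks that fit a budget and drops anything below an assumed relevance threshold (`min_score=0.6`), so distracting or conflicting passages never reach the model. Relevance scores are assumed to come from a retriever, and token counts are crudely approximated by word counts rather than a real tokenizer.

```python
def approx_tokens(text: str) -> int:
    """Crude token estimate: whitespace-separated words (real tokenizers differ)."""
    return len(text.split())


def pack_context(chunks: list[tuple[str, float]], budget: int, min_score: float = 0.6) -> list[str]:
    """Greedily keep the most relevant chunks that fit within the token budget."""
    selected, used = [], 0
    for text, score in sorted(chunks, key=lambda c: c[1], reverse=True):
        if score < min_score:
            break  # chunks are sorted, so every remaining one scores no higher
        cost = approx_tokens(text)
        if used + cost <= budget:
            selected.append(text)
            used += cost
    return selected


chunks = [
    ("Refund requests must be filed within 30 days of purchase.", 0.91),
    ("Refunds are issued to the original payment method.", 0.84),
    ("Our office is closed on public holidays.", 0.12),  # distractor
    ("The 2019 policy allowed refunds within 60 days.", 0.55),  # outdated, conflicting
]

everything = sum(approx_tokens(text) for text, _ in chunks)
packed = pack_context(chunks, budget=20)
print(everything, sum(approx_tokens(t) for t in packed))
```

Here the outdated 60-day policy, which would conflict with the current one, is filtered out, and the packed context uses 18 approximate tokens instead of the 33 needed to send everything.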

Hybrid Architectures: The Best of Both Worlds

Experts advocate combining long-context capabilities with RAG systems. In this hybrid approach, the model can take in extensive context when a task needs it, while targeted retrieval keeps answers precise and holds down resource consumption. GPT-5's design choices exemplify this trend: it relies less on raw context size and more on improved retrieval.
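One simple way to express the hybrid idea is a router that sends the whole knowledge base when it fits comfortably in the context window and falls back to retrieval when it does not. The Python sketch below is hypothetical: `plan_context`, the 50% `headroom` threshold, word-count token estimates, and keyword-overlap ranking are illustrative assumptions, not how ChatGPT, Claude, or any other product actually decides.

```python
import re
from dataclasses import dataclass


@dataclass
class ContextPlan:
    mode: str  # "full_context" or "retrieval"
    passages: list[str]  # what would actually be sent to the model


def approx_tokens(text: str) -> int:
    """Crude token estimate: whitespace-separated words."""
    return len(text.split())


def words(text: str) -> set[str]:
    """Lowercase alphanumeric words, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def plan_context(query: str, documents: list[str], window: int,
                 headroom: float = 0.5, k: int = 3) -> ContextPlan:
    """Send every document if they fit in part of the window; otherwise retrieve top-k."""
    total = sum(approx_tokens(d) for d in documents)
    if total <= window * headroom:
        return ContextPlan("full_context", list(documents))
    # Too large: rank by keyword overlap (a stand-in for a real retriever).
    q = words(query)
    ranked = sorted(documents, key=lambda d: len(q & words(d)), reverse=True)
    return ContextPlan("retrieval", ranked[:k])


docs = [
    "Quarterly revenue grew 12 percent year over year.",
    "The support team resolved most tickets within one day.",
    "Revenue from the EU region declined slightly.",
]
small = plan_context("How did revenue change?", docs, window=128_000)
tight = plan_context("How did revenue change?", docs, window=40, k=2)
print(small.mode, tight.mode, tight.passages)
```

With a 128,000-token window the three short documents are sent whole; shrink the window to 40 tokens and the router switches to retrieval, keeping only the two revenue passages.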

Top AI Tools Supporting Context Engineering

- ChatGPT: Uses projects, system-level instructions, memory, and external connectors to build dynamic, structured context environments.

- Claude: Offers workspace projects that switch automatically between using the full context window and RAG, plus memory features that keep each task's context isolated.

- NotebookLM: A RAG-focused tool suited to multi-document research, generating source-grounded outputs such as video overviews, podcast-style audio overviews, and mind maps.

- TypingMind: Provides flexible LLM integration with customizable knowledge bases, plugin control, and app connectors for precise context management.

- Perplexity: Supports mixed local and web-based sources within workspaces, allowing seamless referencing of both static files and live documentation.

The Future of AI Context: Integration and Optimization

AI tooling appears to be converging on prompt engineering and context engineering as complementary strategies. Simple, targeted prompts suit quick, one-off queries, while rich, multi-source context engineering suits complex, recurring tasks. Blending the two lets AI models deliver more relevant, adaptable answers across use cases.