TL;DR Summary of Meta Introduces AI-Powered Audio Translations with Lip Sync for Reels

Optimixed’s Overview: How Meta’s AI-Driven Audio Translation is Revolutionizing Social Media Content Accessibility

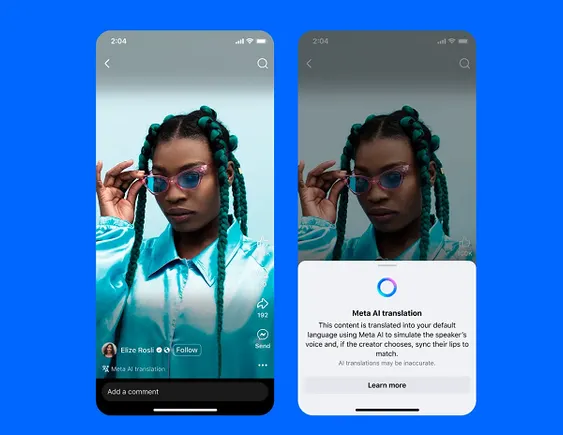

Expanding Reach with Natural-Looking Audio Translations

Meta has introduced an AI translation feature that goes beyond subtitles: it generates translated audio with synchronized lip movements for Facebook and Instagram Reels. The translated audio reproduces the creator’s own voice tone and sound, so the content feels authentic to viewers who speak other languages.

Key Features and User Controls

- Language Support: Launching with translations between English and Spanish, with more languages planned.

- Toggle Options: Creators can enable or disable audio translations and lip-syncing within the Reels composer interface.

- Review Process: Before publishing, creators can review and approve AI-generated translations to maintain content quality.

- Audience Experience: Viewers see translated reels in their preferred language and can choose whether translations are shown.

- Multiple Audio Tracks: Facebook Page managers can add up to 20 manually dubbed audio tracks, which broadens accessibility but does not include lip-sync.

Best Practices and Limitations

For the best translation quality, creators should avoid overlapping speech and keep background noise and music to a minimum. The system handles up to two speakers, which gives the most accurate lip-sync results. AI translation widens a reel’s potential audience, but building a following in a new language still takes time and patience.

Who Can Access This Feature?

Facebook creators with Professional Mode enabled and over 1,000 followers, along with Instagram creators in supported regions, can turn on these AI translation features after updating to the latest version of the app. The feature is a step toward lowering language barriers and connecting audiences across languages on social media.