TL;DR Summary of Understanding the True Nature of AI Language Models

Optimixed’s Overview: Why AI Language Models Are Statistical Predictors, Not Knowledge Engines

The Core Reality Behind AI Responses

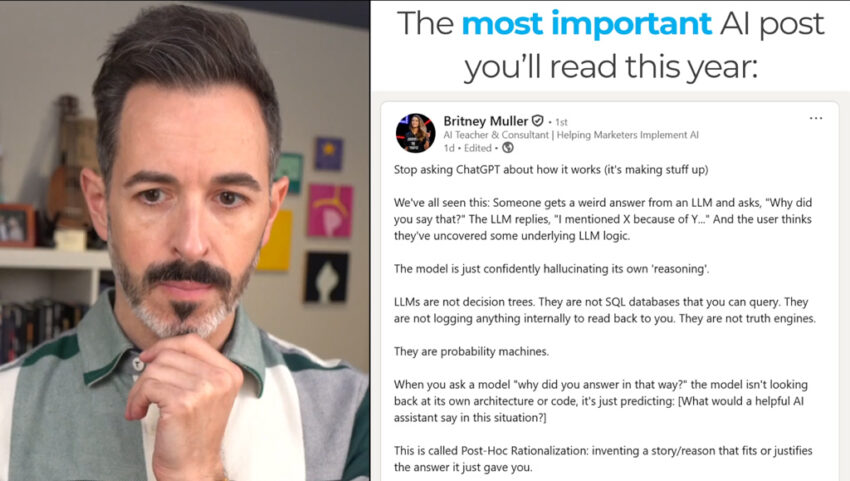

Many users assume that AI systems like ChatGPT can explain their answers by revealing their sources or internal logic. In truth, these models are not self-aware and cannot inspect the workings of their own neural networks. Instead, they function like statistical lotteries: each next token is drawn from a probability distribution learned from vast amounts of text.
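To make the "statistical lottery" idea concrete, here is a minimal sketch of next-token sampling. The token scores in `TOY_LOGITS` are invented for illustration, and the helper names `softmax` and `sample_next_token` are hypothetical. A real model computes scores over a vocabulary of many thousands of tokens, but the final step is broadly similar.

```python
import math
import random

# Hypothetical scores (logits) a model might assign to candidate next tokens
# after the prompt "The capital of France is". The numbers are made up.
TOY_LOGITS = {"Paris": 5.0, "Lyon": 2.0, "located": 1.5, "a": 0.5}


def softmax(logits, temperature=1.0):
    """Turn raw scores into a probability distribution over tokens."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_next_token(logits, temperature=1.0, rng=random):
    """Draw one token at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


if __name__ == "__main__":
    print(softmax(TOY_LOGITS))
    # Repeated draws can differ even though the input never changes.
    print([sample_next_token(TOY_LOGITS) for _ in range(10)])
```

In this sketch, raising `temperature` flattens the distribution, so less likely tokens are drawn more often. Nothing in the process records why a given token won the draw.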

Key Points About AI Language Models

- Not Databases: LLMs don’t store or retrieve factual data like a SQL database; they generate answers dynamically based on token probabilities.

- Hallucinated Explanations: When asked why they gave a certain answer, models fabricate plausible-sounding reasons because they can’t introspect their decision-making processes.

- Retrieval Augmented Generation (RAG): Some systems feed external information (e.g., search engine results) into the model, but the text itself is still produced by probabilistic token selection.

- Variability in Answers: The same question posed multiple times can yield different responses, because each token is sampled from a probability distribution rather than looked up.
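The RAG point above can be sketched in a few lines. This is a simplified illustration, not how any particular product works: the `DOCUMENTS` list, the word-overlap ranking, and the helper names `retrieve` and `build_prompt` are all assumptions made for the example. Real systems typically use a search engine or a vector index for retrieval.

```python
# Hypothetical mini "knowledge base"; a real system would query a search
# engine or a vector index instead.
DOCUMENTS = [
    "The Eiffel Tower is in Paris and opened in 1889.",
    "Python is a programming language created by Guido van Rossum.",
    "The Great Wall of China is thousands of kilometres long.",
]


def tokenize(text):
    """Lowercase, split on whitespace, and strip basic punctuation."""
    return {word.strip(".,?!").lower() for word in text.split()}


def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    q_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(q_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, documents, top_k=1):
    """Paste the retrieved text in front of the question."""
    context = "\n".join(retrieve(question, documents, top_k))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


if __name__ == "__main__":
    print(build_prompt("When did the Eiffel Tower open?", DOCUMENTS))
```

The retrieved passage only changes what the model reads. The answer that follows `Answer:` is still generated token by token from probabilities, so it can still misstate or ignore the supplied context.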

Implications for Users and Professionals

Recognizing that AI language models are fundamentally probability-based autocomplete machines helps users set realistic expectations about their capabilities and limitations. Misunderstanding this can lead to misplaced trust, flawed decision-making, and overreliance on AI-generated explanations or facts.

For anyone leveraging AI in professional or personal contexts, a critical, informed perspective on how these models operate is essential to harness their power responsibly and effectively.